API Endpoints / Schema

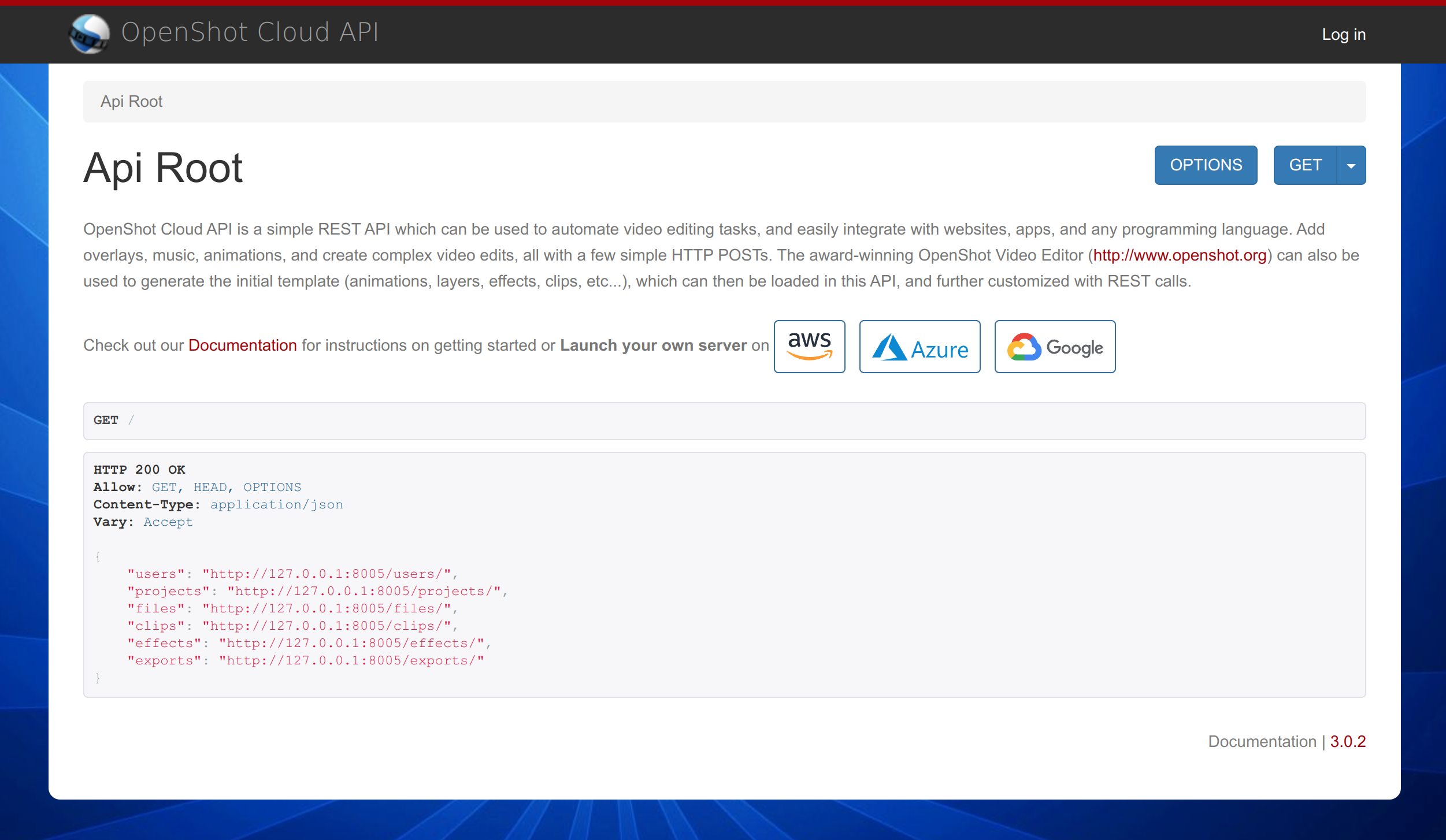

The API endpoints for OpenShot Cloud API are discoverable and browsable on your local instance. The best way to learn and experiment with them is to open the interface in your browser: http://YourInstanceIP/.

To learn more about our schema, see Schema Documentation for definitions of ALL endpoints.

Authentication

Each request must be authenticated before it is processed. OpenShot Cloud API supports multiple user accounts, each with its own credentials. You can create multiple user accounts, and data created in one account can only be accessed by that same account. Each user has a username, password, and token.

OpenShot Cloud API supports 3 different types of authentication.

Basic Authentication

A username and password are sent in clear text (Base64-encoded, not encrypted). This is fine if you are not exposing your private cloud to external clients (such as mobile or desktop apps); otherwise, always use HTTPS. You can create a new user with the /cloud-admin/auth/user/ URL.

Authorization: Basic QWxhZGRpbjpPcGVuU2VzYW1l

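# Python Example
import requests
from requests.auth import HTTPBasicAuth
CLOUD_URL = "http://YourInstanceIP"  # your OpenShot Cloud API instance
end_point = "/projects/"             # any API endpoint
r = requests.get(CLOUD_URL + end_point, auth=HTTPBasicAuth('demo-cloud', 'demo-password'))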
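Basic Authentication only Base64-encodes the credentials, which is why it should be paired with HTTPS whenever the API is reachable from outside. As a small standard-library sketch (the helper names are ours, not part of the API), the code below rebuilds the example header shown above and decodes it again:

```python
import base64


def basic_auth_header(username, password):
    """Build the Authorization header value for HTTP Basic Authentication."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return "Basic " + token


def decode_basic_auth(header):
    """Recover the credentials: Base64 is an encoding, not encryption."""
    encoded = header.split(" ", 1)[1]
    username, _, password = base64.b64decode(encoded).decode("utf-8").partition(":")
    return username, password


print(basic_auth_header("Aladdin", "OpenSesame"))
# Basic QWxhZGRpbjpPcGVuU2VzYW1l
```

Anyone who can read the request can run the decoding step, so the header is only as private as the connection carrying it.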

Session Authentication

Once you have authenticated, a sessionid is returned. This sessionid must be included with every request until it expires. This method is used by our discoverable HTML interface.

Token Authentication

A token is automatically created for each user account, and it can be sent in a header instead of a username and password. To authenticate, a client includes the token key in the Authorization HTTP header, prefixed by the string literal “Token” and separated from it by whitespace. This is fine if you are not exposing your private cloud to external clients (such as mobile or desktop apps); otherwise, always use HTTPS. You can locate a user’s token using the /cloud-admin/authtoken/token/ URL.

Authorization: Token 9944b09199c62bcf9418ad846dd0e4bbdfc6ee4b

# Python Example
import requests
url = "http://YourInstanceIP/projects/"  # any API endpoint on your instance
headers = {'Authorization': 'Token 9054f7aa9305e012b3c2300408c3dfdf390fcddf'}
r = requests.get(url, headers=headers)

/tokens/

API endpoint that retrieves a token for a specific user.

Example request:

{
    "username": "user123",
    "password": "password123"
}

Example response:

{
    "token": "b4a2b29478ec7ecaa8e7620ga39f82ea5fbd1234"
}
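A minimal sketch of requesting a token and reusing it, written with only the Python standard library (urllib) so it runs without extra packages; the requests library used elsewhere in this document works just as well. It assumes /tokens/ accepts a POST with a JSON body like the request above (check the Schema Documentation to confirm); CLOUD_URL and the helper names are placeholders.

```python
import json
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: replace with your instance URL


def build_token_request(username, password):
    """Build a POST /tokens/ request that carries the credentials as JSON."""
    body = json.dumps({"username": username, "password": password}).encode("utf-8")
    return urllib.request.Request(
        CLOUD_URL + "/tokens/",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def get_token(username, password):
    """Send the request and return the "token" value from the JSON response."""
    with urllib.request.urlopen(build_token_request(username, password)) as resp:
        return json.loads(resp.read())["token"]


def token_header(token):
    """Header for Token Authentication: the literal "Token", a space, then the key."""
    return {"Authorization": "Token " + token}


# Usage (needs a running instance):
# headers = token_header(get_token("user123", "password123"))
```

Fetching the token once and reusing the header avoids sending the password with every request.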

Security

By default, OpenShot Cloud API requests and responses are unsecured and use port 80. If you do not need external access to the API, please close port 80 and ensure the server is only internally accessible. However, if you do need external access to the API, HTTPS is a must to protect your credentials when making requests. There are 2 basic approaches for enabling SSL for OpenShot Cloud API.

SSL (EC2 Load Balancer)

This is the recommended approach for enabling SSL for OpenShot Cloud API. Launch an EC2 Load Balancer, configure it with your SSL certificate, and connect the load balancer to your OpenShot Cloud API server instance. Use the load balancer’s DNS with HTTPS for making requests, thus keeping the OpenShot Cloud API instance internal, private, and safe. This also requires no changes to the OpenShot Cloud API instance.

SSL (Configure Apache)

This approach requires each OpenShot Cloud API server to be manually configured with SSL (Apache web server). Edit the following file and point it to your specific SSL certificate files (which must be uploaded to the server, placed in a folder, and secured with file permissions):

$ sudo nano /etc/apache2/sites-available/openshot-ssl.conf

SSLCertificateFile /etc/ssl/certs/SITENAME.crt

SSLCACertificateFile /etc/ssl/certs/SITENAME.ca-bundle

SSLCertificateKeyFile /etc/ssl/private/SITENAME.key

Once configured, restart Apache and other API services:

$ sudo supervisorctl restart all

$ sudo service apache2 restart

Now that Apache is manually configured with SSL, be sure to make all requests using HTTPS, to protect your credentials.

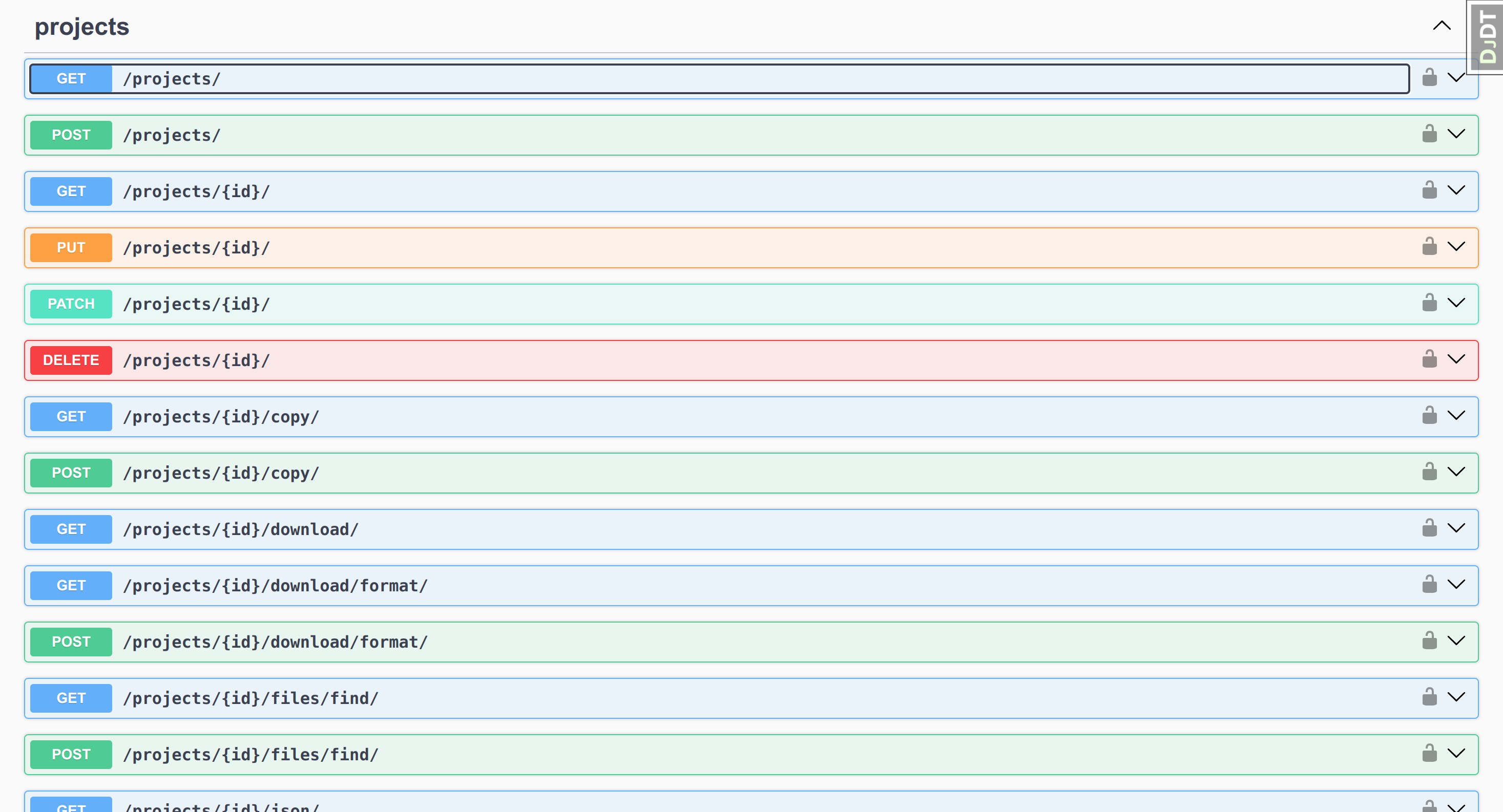

Methods

REST is a design pattern for web services modeled around HTTP requests. Each operation uses its own HTTP method:

| Method | Description |
|---|---|
| GET | Get data from the API (e.g., request a project, clip, or export) |
| POST | Create data on the API (e.g., create a project, clip, or export) |
| PUT | Update existing data on the API (e.g., update a project, clip, or export) |
| PATCH | Partially update existing data on the API (e.g., update only a Clip’s position) |
| DELETE | Delete existing data from the API (e.g., delete a project, clip, or export) |
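To make the method table concrete, here is a small standard-library sketch of one helper that can issue any of these methods with Token Authentication. CLOUD_URL, TOKEN, the helper names, and the IDs in the usage comments are placeholders; JSON request bodies are assumed to be accepted, as the examples in this document suggest.

```python
import json
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user


def build_request(method, path, payload=None):
    """Build a request for any HTTP method; a payload, if given, is sent as JSON."""
    headers = {"Authorization": "Token " + TOKEN}
    data = None
    if payload is not None:
        data = json.dumps(payload).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(CLOUD_URL + path, data=data, headers=headers, method=method)


def call(method, path, payload=None):
    """Send the request and decode the JSON body (None when the body is empty)."""
    with urllib.request.urlopen(build_request(method, path, payload)) as resp:
        body = resp.read()
        return json.loads(body) if body else None


# Usage (IDs are illustrative):
# call("GET", "/projects/65/")
# call("POST", "/projects/", {"name": "Example Project", "width": 1920, "height": 1080,
#                              "fps_num": 30, "fps_den": 1, "sample_rate": 44100,
#                              "channels": 2, "channel_layout": 3, "json": "{}"})
# call("PATCH", "/clips/87/", {"position": 5.0})   # partial update
# call("DELETE", "/projects/65/")
```

PATCH sends only the fields being changed, while PUT is expected to carry the full object.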

Responses

OpenShot Cloud API uses HTTP status codes to indicate the outcome of each request:

| Status Code | Meaning |
|---|---|
| 200 | Successful request, good job! |
| 400 | Bad request, something is wrong with your request |
| 403 | Forbidden, permission denied |
| 500 | Server error, something broke while processing the request |

Informational responses, such as success and failure messages, use the following JSON payload syntax and include a “detail” attribute.

{

"detail": "Important success or failure message"

}

Other responses return actual JSON data structures, with no “detail” attribute.

[

{

"url": "http://127.0.0.1:8008/projects/21/",

"id": 21,

"name": "Example Project",

"width": 1920,

"height": 1080,

"fps_num": 30,

"fps_den": 1,

"sample_rate": 44100,

"channels": 2,

"channel_layout": 3,

"video_format": "mp4",

"video_codec": "libx264",

"video_bitrate": 8000000,

"audio_codec": "ac3",

"audio_bitrate": 1920000,

"files": [],

"clips": [],

"effects": [],

"json": "{}"

}

]
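Since informational responses carry a “detail” attribute and data responses do not, a client can tell them apart mechanically. A standard-library sketch follows (helper names are illustrative). Note that urllib raises HTTPError for 400, 403, and 500 responses, so the error body has to be read from the exception.

```python
import json
import urllib.error
import urllib.request


def read_response(request):
    """Return (status_code, parsed JSON or None), including for 4xx/5xx responses."""
    try:
        with urllib.request.urlopen(request) as resp:
            status, body = resp.status, resp.read()
    except urllib.error.HTTPError as err:  # urllib raises on 400, 403, 500, ...
        status, body = err.code, err.read()
    try:
        return status, json.loads(body) if body else None
    except ValueError:  # e.g. an HTML error page instead of JSON
        return status, None


def classify(status, payload):
    """Tell informational {"detail": ...} messages apart from data structures."""
    failed = status >= 400
    if isinstance(payload, dict) and "detail" in payload:
        return ("error" if failed else "message"), payload["detail"]
    return ("error" if failed else "data"), payload
```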

Pagination

Many endpoints return data in a paginated format (page by page) to keep responses small and fast. You can request a specific page using the following syntax: /endpoint/?page=1. The results attribute contains the list of endpoint-specific data, while next and previous link to the adjacent pages (null when there is no such page).

{

"count": 1,

"next": null,

"previous": null,

"results": []

}
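A common pattern is to walk every page by following the next link until it is null. A minimal standard-library sketch, where CLOUD_URL, TOKEN, and the helper names are placeholders; the fetch function is a parameter so the loop can be exercised without a server:

```python
import json
import urllib.parse
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user


def page_url(endpoint, page):
    """URL for one page of a paginated endpoint, e.g. /projects/?page=2."""
    return CLOUD_URL + endpoint + "?" + urllib.parse.urlencode({"page": page})


def fetch_json(url):
    """GET a URL with Token auth and decode the JSON body."""
    req = urllib.request.Request(url, headers={"Authorization": "Token " + TOKEN})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


def iter_results(first_url, fetch=fetch_json):
    """Yield every item across all pages by following the "next" links."""
    url = first_url
    while url:
        page = fetch(url)
        yield from page["results"]
        url = page["next"]  # null (None) on the last page


# Usage: all_projects = list(iter_results(page_url("/projects/", 1)))
```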

Schema Documentation

A machine-readable schema describes what resources are available via the API, what their URLs are, how they are represented, and what operations they support. We support the OpenAPI 3.0+ (YAML) schema specification.

Built-in Demo

Visit your local instance (http://YourInstanceIP/) for a built-in interactive demo where you can invoke and test each endpoint in your browser. This is perhaps the easiest way to inspect and learn how to use the API.

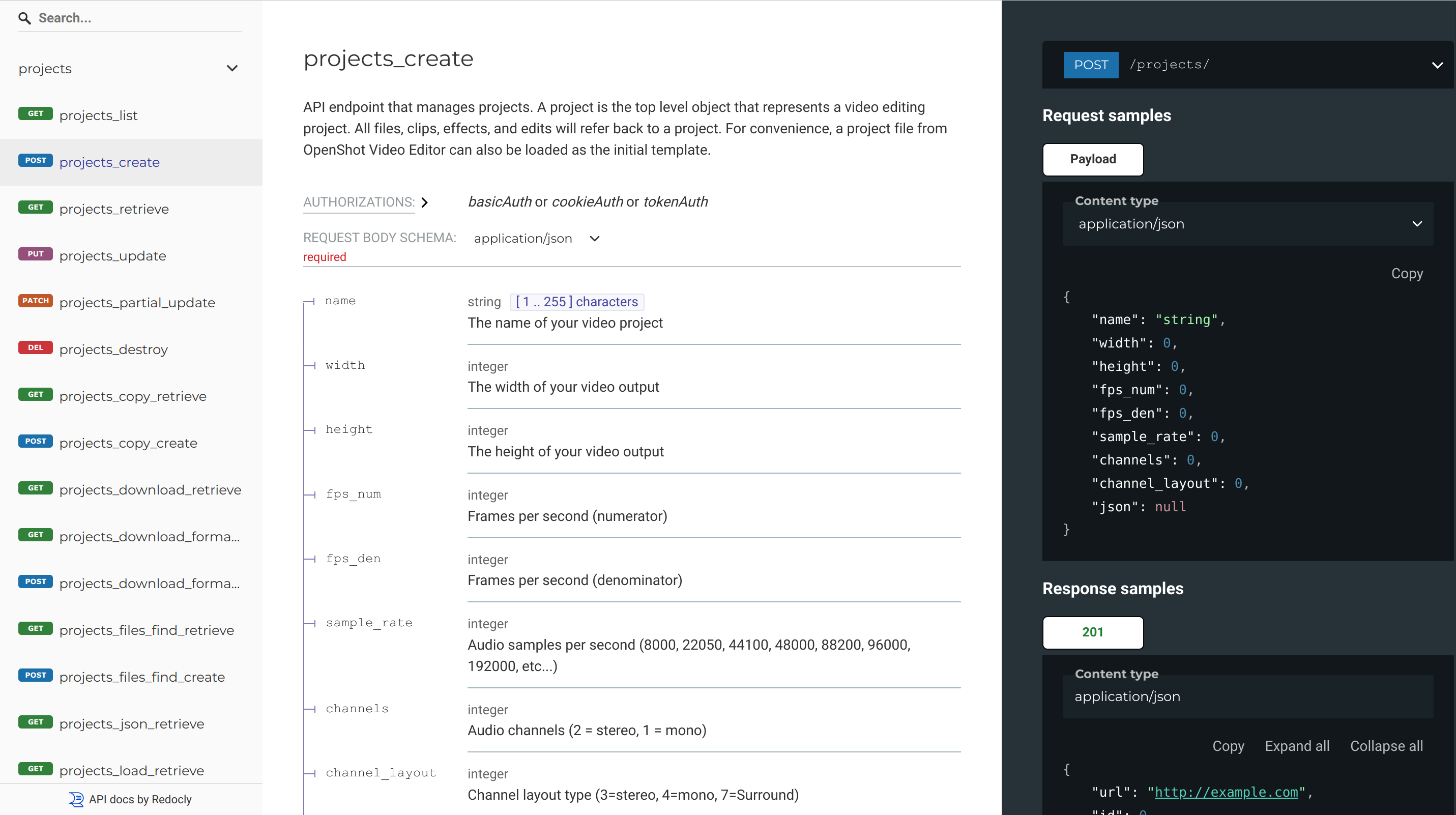

Swagger UI

Swagger UI (http://YourInstanceIP/swagger-ui/) lets you visualize and interact with the API’s resources without having any of the implementation logic in place. It is similar to the built-in demo above, but documents ALL available endpoints a bit more clearly.

Redoc

Redoc (http://YourInstanceIP/redoc/) is an open source tool for generating documentation from OpenAPI definitions. By default Redoc offers a three-panel, responsive layout which includes search, documentation, and response examples. This is another great source to learn about ALL available endpoints.

/projects/

API endpoint that manages projects. A project is the top level object that represents a video editing project. All files, clips, effects, and edits will refer back to a project. For convenience, a project file from OpenShot Video Editor can also be loaded as the initial template.

See Schema Documentation for a more accurate schema definition for ALL endpoints.

{

"url": "http://cloud.openshot.org/projects/65/",

"id": 65,

"name": "Example Project",

"width": 1920,

"height": 1080,

"fps_num": 30,

"fps_den": 1,

"sample_rate": 44100,

"channels": 2,

"channel_layout": 3,

"files": [],

"clips": [],

"effects": [],

"exports": [],

"actions": [

"http://cloud.openshot.org/projects/65/load/",

"http://cloud.openshot.org/projects/65/files/",

"http://cloud.openshot.org/projects/65/files/find/",

"http://cloud.openshot.org/projects/65/clips/",

"http://cloud.openshot.org/projects/65/effects/",

"http://cloud.openshot.org/projects/65/exports/",

"http://cloud.openshot.org/projects/65/thumbnail/",

"http://cloud.openshot.org/projects/65/title/",

"http://cloud.openshot.org/projects/65/validate/",

"http://cloud.openshot.org/projects/65/json/"

],

"json": {},

"date_created": "2017-11-07T04:14:46.896016Z",

"date_updated": "2017-11-07T04:14:46.896048Z"

}

/projects/<id>/

API endpoint to manage a specific project.

Request Parameters:

| Name | Description |
|---|---|
| name | |
| width | |
| height | |
| fps_num | |
| fps_den | |
| sample_rate | |
| channels | |
| channel_layout | |
| json | |

Channel Layouts

Channel layouts control how the audio channels are arranged in an exported video project. For example, a STEREO layout indicates 2 channels, arranged left and right. A 3.1 layout indicates 4 channels: front left, front center, front right, and subwoofer. For reference, here are all available channel layouts:

| Name | Value |
|---|---|
| LAYOUT_MONO | 4 |
| LAYOUT_STEREO | 3 |
| LAYOUT_2POINT1 | 11 |
| LAYOUT_2_1 | 259 |
| LAYOUT_SURROUND | 7 |
| LAYOUT_3POINT1 | 15 |
| LAYOUT_4POINT0 | 263 |
| LAYOUT_4POINT1 | 271 |
| LAYOUT_2_2 | 1539 |
| LAYOUT_QUAD | 51 |
| LAYOUT_5POINT0 | 1543 |
| LAYOUT_5POINT1 | 1551 |
| LAYOUT_5POINT0_BACK | 55 |
| LAYOUT_5POINT1_BACK | 63 |
| LAYOUT_6POINT0 | 1799 |
| LAYOUT_6POINT0_FRONT | 1731 |
| LAYOUT_HEXAGONAL | 311 |
| LAYOUT_6POINT1 | 1807 |
| LAYOUT_6POINT1_BACK | 319 |
| LAYOUT_6POINT1_FRONT | 1739 |
| LAYOUT_7POINT0 | 1591 |
| LAYOUT_7POINT0_FRONT | 1735 |
| LAYOUT_7POINT1 | 1599 |
| LAYOUT_7POINT1_WIDE | 1743 |
| LAYOUT_7POINT1_WIDE_BACK | 255 |
| LAYOUT_OCTAGONAL | 1847 |
| LAYOUT_STEREO_DOWNMIX | 1610612736 |

Example request:

{
    "name": "Example Project",
    "width": 1920,
    "height": 1080,
    "fps_num": 30,
    "fps_den": 1,
    "sample_rate": 44100,
    "channels": 2,
    "channel_layout": 3,
    "json": "{}"
}
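The channels and channel_layout fields should agree with each other. The layout values in the table match FFmpeg’s channel-layout bitmasks (one bit per speaker position), so under that assumption the channel count is simply the number of set bits. The sketch below derives channels from the layout when building a project payload and POSTs it to /projects/ using only the standard library; CLOUD_URL, TOKEN, and the helper names are placeholders.

```python
import json
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user

LAYOUT_MONO, LAYOUT_STEREO, LAYOUT_5POINT1 = 4, 3, 1551  # values from the table above


def channels_in_layout(layout):
    """Channel count implied by a layout bitmask: one set bit per speaker."""
    return bin(layout).count("1")


def project_payload(name, width=1920, height=1080, fps=(30, 1),
                    sample_rate=44100, channel_layout=LAYOUT_STEREO):
    """Request body for a project, keeping channels consistent with the layout."""
    fps_num, fps_den = fps
    return {
        "name": name, "width": width, "height": height,
        "fps_num": fps_num, "fps_den": fps_den, "sample_rate": sample_rate,
        "channels": channels_in_layout(channel_layout),
        "channel_layout": channel_layout,
        "json": "{}",
    }


def create_project(payload):
    """POST the payload to /projects/ and return the decoded response."""
    req = urllib.request.Request(
        CLOUD_URL + "/projects/",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": "Token " + TOKEN, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

For example, LAYOUT_5POINT1 (1551) has six bits set, which matches its six channels.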

/projects/<id>/clips/

API endpoint to list and manage clips for a specific project.

/projects/<id>/copy/

API endpoint that copies/duplicates a project, including all File and Clip objects and all local file assets. A duplicated project can be deleted or changed without affecting the original project. This is especially useful for using another project as a template, including all its assets.

{

"name": "New Project Name"

}

/projects/<id>/download/

API endpoint that returns a ZIP archive of the entire project JSON and its associated assets (a binary zip file). This is useful for downloading an entire project for offline testing with OpenShot Video Editor (desktop editor). Only the GET method is supported on this endpoint.

See /projects/<id>/load/ for uploading a ZIP archive of an entire cloud project.

/projects/<id>/download/format/

API endpoint that returns the project in a different format (EDL or XML), inside a ZIP archive of the entire project and its associated assets (a binary zip file). This is useful for downloading an entire project for offline editing in a non-OpenShot desktop editor. NOTE: Due to limitations of the EDL format, an EDL export only supports a single layer (i.e., track) at a time, and that layer must be requested using the layer key of the json attribute.

| Name | Description |
|---|---|
| edl | Edit Decision List (compatible with most video editors, but has some limitations) |
| xml | Apple Final Cut Pro format (can also be imported into Adobe and other editors) |

Only the POST method is supported on this endpoint.

EDL request (exports layer 1 only):

{
    "format": "edl",
    "json": "{\"layer\": 1}"
}

XML request:

{
    "format": "xml",
    "json": "{}"
}
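Two details are easy to miss here: the json field is itself a JSON-encoded string (hence the escaped quotes above), and the response is a binary ZIP rather than JSON. A standard-library sketch that handles both; CLOUD_URL, TOKEN, and the helper names are placeholders, and requiring a layer for EDL follows the note above.

```python
import json
import shutil
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user


def format_payload(fmt, layer=None):
    """Body for /projects/<id>/download/format/; "json" is itself a JSON string."""
    if fmt not in ("edl", "xml"):
        raise ValueError("format must be 'edl' or 'xml'")
    if fmt == "edl" and layer is None:
        raise ValueError("EDL exports need a single layer")
    options = {} if layer is None else {"layer": layer}
    return {"format": fmt, "json": json.dumps(options)}


def download_format(project_id, dest_path, fmt, layer=None):
    """POST the request and stream the returned ZIP archive to dest_path."""
    req = urllib.request.Request(
        f"{CLOUD_URL}/projects/{project_id}/download/format/",
        data=json.dumps(format_payload(fmt, layer)).encode("utf-8"),
        headers={"Authorization": "Token " + TOKEN, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp, open(dest_path, "wb") as out:
        shutil.copyfileobj(resp, out)
```

Encoding the options with json.dumps avoids hand-escaping quotes inside the string.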

/projects/<id>/effects/

API endpoint to list and manage effects for a specific project.

/projects/<id>/exports/

API endpoint that exports your video project for rendering. Rendering converts your project into a final video file, which can then be accessed, downloaded, or deleted. See Export / Render.

/projects/<id>/files/

API endpoint to list and manage files for a specific project.

/projects/<id>/files/find/

API endpoint to return a list of matching File objects for a specific time range with an optional filename filter. Useful if you need to get just the files in a section of the timeline, or a file object with a specific name.

{

"position_in_seconds": 0.0,

"length_in_seconds": 10.0,

"filename_contains": ""

}

[
    {
        "media": "http://cloud.openshot.org/media/video/files/48/file1.JPG",
        "download": "http://cloud.openshot.org/files/165/download/",
        "url": "http://cloud.openshot.org/files/165/"
    },
    {
        "media": "http://cloud.openshot.org/media/video/files/48/file2.mp4",
        "download": "http://cloud.openshot.org/files/166/download/",
        "url": "http://cloud.openshot.org/files/166/"
    },
    {
        "media": "http://cloud.openshot.org/media/video/files/48/file3.mp4",
        "download": "http://cloud.openshot.org/files/167/download/",
        "url": "http://cloud.openshot.org/files/167/"
    }
]
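A standard-library sketch of calling this endpoint and indexing the results. It assumes the request is sent as a POST with the JSON body shown above and that an empty filename_contains means “no filter”; CLOUD_URL, TOKEN, and the helper names are placeholders.

```python
import json
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user


def find_payload(position, length, filename_contains=""):
    """Time range in seconds plus an optional filename filter (assumption: "" = no filter)."""
    return {
        "position_in_seconds": float(position),
        "length_in_seconds": float(length),
        "filename_contains": filename_contains,
    }


def find_files(project_id, position, length, filename_contains=""):
    """POST to /projects/<id>/files/find/ and return the list of matching Files."""
    req = urllib.request.Request(
        f"{CLOUD_URL}/projects/{project_id}/files/find/",
        data=json.dumps(find_payload(position, length, filename_contains)).encode("utf-8"),
        headers={"Authorization": "Token " + TOKEN, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


def download_urls(files):
    """Map each File's API URL to its authenticated download URL."""
    return {f["url"]: f["download"] for f in files}
```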

/projects/<id>/json/

API endpoint that outputs your OpenShot Cloud API project as an OpenShot Video Editor project file (JSON format). This is especially useful for testing and debugging your cloud project files. OpenShot Video Editor is our award-winning open-source video editor, and is compatible with Linux, Mac, and Windows.

/projects/<id>/load/

API endpoint that loads/initializes a project using an OpenShot Video Editor project file (JSON format). NOTE: This will remove all existing files, clips, and effects, and re-initialize the project to exactly match the project data you are loading. OpenShot Video Editor is our award-winning open-source desktop video editor, and is compatible with Linux, Mac, and Windows.

You can optionally upload a ZIP archive containing all media files used by a project, which is usually much faster than uploading each File object separately. The ZIP should contain all files used in the project (MP4, PNG, SVG, etc…), and also the OpenShot Project file (*.osp or *.json).

You can also combine these two approaches: upload the project data JSON using the “json” field, and also attach a ZIP archive with all media files used by that project.

See /projects/<id>/download/ for downloading a ZIP archive of an entire cloud project.

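Load project JSON only:

{
    "json": "{...}"
}

Load a ZIP archive only (the archive is sent as a file upload):

{
    "archive": null
}

Load project JSON and a ZIP archive together:

{
    "json": "{ ... Project JSON ...}",
    "archive": null
}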
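A minimal standard-library sketch of calling /projects/<id>/load/. It assumes the endpoint accepts a POST encoded as multipart/form-data with the “json” and “archive” field names shown above; CLOUD_URL, TOKEN, and the helper names are placeholders. With requests, the files= argument builds the same multipart body for you.

```python
import json
import os
import urllib.request
import uuid

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user


def encode_multipart(fields, files):
    """Encode {name: text} fields and {name: (filename, bytes)} files as multipart/form-data."""
    boundary = uuid.uuid4().hex
    sep = b"--" + boundary.encode()
    parts = []
    for name, value in fields.items():
        parts += [sep, f'Content-Disposition: form-data; name="{name}"'.encode(),
                  b"", value.encode("utf-8")]
    for name, (filename, content) in files.items():
        parts += [sep,
                  f'Content-Disposition: form-data; name="{name}"; filename="{filename}"'.encode(),
                  b"Content-Type: application/octet-stream", b"", content]
    parts += [sep + b"--", b""]  # closing boundary, then a trailing CRLF
    return b"\r\n".join(parts), "multipart/form-data; boundary=" + boundary


def load_project(project_id, project_json=None, zip_path=None):
    """Send project JSON (as a string), a ZIP archive, or both to /projects/<id>/load/."""
    fields = {} if project_json is None else {"json": project_json}
    files = {}
    if zip_path is not None:
        with open(zip_path, "rb") as fh:
            files["archive"] = (os.path.basename(zip_path), fh.read())
    body, content_type = encode_multipart(fields, files)
    req = urllib.request.Request(
        f"{CLOUD_URL}/projects/{project_id}/load/", data=body, method="POST",
        headers={"Authorization": "Token " + TOKEN, "Content-Type": content_type},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

Keep in mind that loading replaces all existing files, clips, and effects in the target project.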

Create & Load ZIP Archives

Follow these steps to create a template project in desktop OpenShot Video Editor, export a *.zip of the project, and then load this *.zip into OpenShot Cloud API with a single endpoint. Download the latest version of desktop OpenShot Video Editor at https://www.openshot.org/download.

1. Organize Template Assets: Create a local folder (e.g., \Projects\Project-001\) to hold all your assets, such as images, video files, music, and SVG titles. Note: The OpenShot Cloud API does not support sub-folders, so keep all assets in a single folder.
2. Arrange Clips in OpenShot: Drag the assets into OpenShot Video Editor and arrange them on the Timeline. The Timeline represents your final video, so order the clips as you want them to appear. Add animations, key-frames, and any other complex features you need in your template project.
3. Save the Project: Save the OpenShot project file (*.osp) into the same local folder that holds your assets.
4. Compress the Folder: Close OpenShot Video Editor, navigate to the folder in File Explorer, and create a compressed (zipped) folder:
   - Right-click the folder, select Send to, and then choose Compressed (zipped) folder.
   - Windows will create a ZIP file with the same name as the folder.
5. Upload the ZIP File: Use the /projects/<id>/load/ endpoint in the Cloud API to upload the ZIP file. This step creates all the File and Clip objects and loads the project into the Cloud API.
6. Make Adjustments in the Cloud API: Once loaded, use the Cloud API to modify the project as needed (e.g., replace files, edit SVG titles, or make other adjustments).
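On Linux or macOS, or in an automated pipeline, the compression step can be scripted instead. This sketch uses Python’s zipfile module, refuses sub-folders (which the API does not support), and checks that a project file is present. It assumes the load endpoint accepts the layout Windows produces (the folder name as the top-level entry), so it mirrors that layout; the helper name is illustrative.

```python
import os
import zipfile


def zip_template_folder(folder, zip_path=None):
    """Zip a flat template folder (assets plus the *.osp project) for /projects/<id>/load/."""
    folder = os.path.abspath(folder)
    top = os.path.basename(folder)
    zip_path = zip_path or folder + ".zip"  # same name as the folder, next to it
    entries = sorted(os.listdir(folder))
    subfolders = [e for e in entries if os.path.isdir(os.path.join(folder, e))]
    if subfolders:
        raise ValueError(f"sub-folders are not supported: {subfolders}")
    if not any(e.lower().endswith((".osp", ".json")) for e in entries):
        raise ValueError("no OpenShot project file (*.osp or *.json) in folder")
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for entry in entries:
            # Assumption: keep the folder name as the top-level entry, like Windows "Send to"
            zf.write(os.path.join(folder, entry), arcname=f"{top}/{entry}")
    return zip_path
```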

/projects/<id>/merge/

API endpoint that merges a project (its clips & effects) into the current project. It accepts parameters for offsetting the position and layer of all merged items together, or advanced JSON merge instructions that automatically adjust the attributes of the incoming clips & effects (position, start, end, end-position, layer). For example, you can snap the newly merged clips and effects to items in the current project to prevent gaps or overlaps.

This endpoint is especially useful for combining projects used as templates (or template sections). For example, you can merge in an intro project, several types of scene projects, and an outro project, and have all of their clips/effects move to bump up against each other as needed.

NOTE: Each merged clip and effect also retains its original ID (in a json attribute). For example, if Clip (ID: 123) is merged into a new project, the new Clip gets a new ID but retains a field called clip.json["original_merge_id"]. This field is also used when evaluating advanced merge source and target attributes. This lets you use consistent IDs to target clips and effects from different projects, which can simplify merging different scenes that are aware of each other.

Request Parameters:

| Name | Description |
|---|---|
| position | |
| layer | |
| project | |
| json | |

Advanced JSON Merge keys (example below):

| Name | Description |
|---|---|
| source | |
| source_type | |
| source_attribute | |
| target | |
| target_type | |
| target_attribute | |
| offset | |

[

{

"source": "all",

"source_type": "all",

"source_attribute": "position", # Adjust all clips/effect positions

"target": 680,

"target_type": "clip",

"target_attribute": "end-position", # ... to the end position of clip 680

"offset": -5 # ... and subtract 5 seconds from the position

},

{

"source": 682,

"source_type": "clip",

"source_attribute": "position", # Adjust position of only clip 682

"target": 679,

"target_type": "clip",

"target_attribute": "end-position", # ... to the end position of clip 679

"offset": 0

},

{

"source": 232,

"source_type": "effect",

"source_attribute": "position", # Adjust the position of only effect 232

"target": 229,

"target_type": "effect",

"target_attribute": "end-position", # ... to the end position of effect 229

"offset": 5 # ... and add 5 seconds to the position

}

]
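The annotated example above is not strict JSON (it contains # comments), so it is easier to build the instructions in code and serialize them. A standard-library sketch that rebuilds the three instructions; it assumes the list is sent as a JSON string in the json field and that project takes a project URL (as other endpoints do), so confirm both against the Schema Documentation. The helper names snap and merge_payload are hypothetical.

```python
import json


def snap(source, source_type, target, target_type, offset=0):
    """One advanced merge instruction: move the source's position to the
    target's end-position, plus `offset` seconds."""
    return {
        "source": source, "source_type": source_type, "source_attribute": "position",
        "target": target, "target_type": target_type, "target_attribute": "end-position",
        "offset": offset,
    }


def merge_payload(project, instructions=None, position=None, layer=None):
    """Body for /projects/<id>/merge/; only the keys you pass are included.
    Assumption: the instruction list travels as a JSON string in "json"."""
    payload = {"project": project}
    if position is not None:
        payload["position"] = position
    if layer is not None:
        payload["layer"] = layer
    if instructions:
        payload["json"] = json.dumps(instructions)
    return payload


# The three instructions from the example above:
instructions = [
    snap("all", "all", 680, "clip", offset=-5),    # everything to clip 680's end, minus 5 s
    snap(682, "clip", 679, "clip"),                 # only clip 682, to clip 679's end
    snap(232, "effect", 229, "effect", offset=5),   # only effect 232, plus 5 s
]
payload = merge_payload("http://YourInstanceIP/projects/12/", instructions)
```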

/projects/<id>/storage/

API endpoint that calculates the file size of an entire project (including all File and Export objects). It can use the local filesystem, connected cloud storage, or the pre-calculated json file size values. For the project’s own files, all 3 backends return the same size, but each has its own advantages. For example, cloud storage also counts other objects stored in the same cloud path (such as other files uploaded by your application). json does not require the files to exist on the API server for inspection. local is the default method; it iterates through the local media files, and thus requires the files to exist on the API server.

Request Parameters:

| Name | Description |
|---|---|
| backend | |

{
    "backend": "local"
}

{

"backend": "local",

"storage": 20696366

}
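The storage value is a raw byte count. A standard-library sketch that queries a storage endpoint and formats the result with binary units; it assumes a POST with the JSON body shown above, and CLOUD_URL, TOKEN, and the helper names are placeholders.

```python
import json
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user


def storage_bytes(path, backend="local"):
    """POST {"backend": ...} to a storage endpoint and return the "storage" byte count."""
    req = urllib.request.Request(
        CLOUD_URL + path,
        data=json.dumps({"backend": backend}).encode("utf-8"),
        headers={"Authorization": "Token " + TOKEN, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["storage"]


def human_size(num_bytes):
    """Format a byte count with binary units, e.g. 20696366 -> '19.74 MiB'."""
    size = float(num_bytes)
    for unit in ("B", "KiB", "MiB", "GiB"):
        if size < 1024 or unit == "GiB":
            return f"{size:.2f} {unit}"
        size /= 1024


# Usage: human_size(storage_bytes("/projects/65/storage/", backend="json"))
```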

/projects/<id>/thumbnail/

API endpoint to return a thumbnail image of a specific project frame (returns binary image). Image formats supported: BMP, JPG, JPEG, PNG, PPM, XBM, XPM.

{

"frame_number": 1,

"width": 1920,

"height": 1080,

"image_format": "JPG",

"image_quality": 100

}

/projects/<id>/title/

API endpoint to create new SVG title File objects. It is a quick way to generate dynamic SVG titles and automatically add them to your project’s File list. Templates are loaded from the /api/api_app/static/titles/ folder. See Titles / Text.

{

"template": "Center-Text.svg",

"text": "Text of title",

"font_size": 100.0,

"font_name": "Bitstream Vera Sans",

"fill_color": "#ffcc00",

"fill_opacity": 1.0,

"stroke_color": "#000000",

"stroke_size": 3.0,

"stroke_opacity": 1.0,

"drop_shadow": true,

"background_color": "#000000",

"background_opacity": 0.0

}
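A standard-library sketch that starts from the example values above, overrides only what you pass, and rejects misspelled option names before the request is sent. The example values are not necessarily the server’s defaults; POST is assumed, and CLOUD_URL, TOKEN, and the helper names are placeholders.

```python
import json
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user

# Values copied from the example request above (not necessarily server defaults)
TITLE_EXAMPLE = {
    "template": "Center-Text.svg", "font_size": 100.0,
    "font_name": "Bitstream Vera Sans", "fill_color": "#ffcc00",
    "fill_opacity": 1.0, "stroke_color": "#000000", "stroke_size": 3.0,
    "stroke_opacity": 1.0, "drop_shadow": True,
    "background_color": "#000000", "background_opacity": 0.0,
}


def title_payload(text, **overrides):
    """Start from the example values, set the text, and apply known overrides."""
    unknown = set(overrides) - set(TITLE_EXAMPLE)
    if unknown:
        raise ValueError(f"unknown title options: {sorted(unknown)}")
    return {**TITLE_EXAMPLE, "text": text, **overrides}


def create_title(project_id, text, **overrides):
    """POST to /projects/<id>/title/ and return the decoded JSON response."""
    req = urllib.request.Request(
        f"{CLOUD_URL}/projects/{project_id}/title/",
        data=json.dumps(title_payload(text, **overrides)).encode("utf-8"),
        headers={"Authorization": "Token " + TOKEN, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```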

/projects/<id>/validate/

API endpoint that validates your current video project. It checks for missing file resources, missing properties, missing clips, and many other critical data points. Only valid projects can be rendered.

/files/

API endpoint that manages file objects. These can be images, videos, or audio files. File objects belong to a project and are referenced by clip objects. All assets used in your video must be added as file objects before they can be added as clips, and multiple clips can refer to a single file object.

File objects are rich with metadata and include all kinds of interesting attributes about the file (and its content), such as many technical attributes and embedded metadata (camera info, artist/album info, device rotation, etc…).

See Schema Documentation for a more accurate schema definition for ALL endpoints.

Upload a file (the media field is sent as a multipart file upload):

{

"media": null,

"project": "http://127.0.0.1:8001/projects/36/",

"json": "{}"

}

Create a file from a remote URL:

{

"media": null,

"project": "http://127.0.0.1:8001/projects/36/",

"json": "{\"name\": \"Custom-Filename.mp4\", \"url\": \"http://example/file/path.jpg\"}"

}

Create a file from an S3 bucket (configured in api/api/settings.py, and requires credentials):

{

"media": null,

"project": "http://127.0.0.1:8001/projects/36/",

"json": "{ \"url\": \"my/S3/path/FILE.MP4\", \"bucket\": \"MyS3Bucket\", \"name\": \"Custom-Filename.mp4\" }"

}

Create a file from a cloud storage container (configured in api/api/settings.py, and requires credentials):

{

"media": null,

"project": "http://127.0.0.1:8001/projects/36/",

"json": "{ \"url\": \"my/path/FILE.MP4\", \"container\": \"MyContainerName\", \"name\": \"Custom-Filename.mp4\" }"

}

Create a file from a path that already exists on the API server:

{

"media": null,

"project": "http://127.0.0.1:8001/projects/36/",

"json": "{ \"url\": \"video/files/36/LOCAL-FILE.MP4\", \"name\": \"Custom-Filename.mp4\" }"

}
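In all of these variants the json field is a string that contains JSON, which is why its quotes are escaped. Building it with json.dumps avoids escaping mistakes. A standard-library sketch follows; it assumes new File objects are created by POSTing to /files/ with a JSON body, and CLOUD_URL, TOKEN, and the helper names are placeholders.

```python
import json
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user


def file_payload(project_url, url=None, name=None, bucket=None, container=None):
    """Body for /files/ when media is not uploaded; "json" is a JSON-encoded string."""
    options = {key: value for key, value in
               (("url", url), ("bucket", bucket), ("container", container), ("name", name))
               if value is not None}
    return {"media": None, "project": project_url, "json": json.dumps(options)}


def create_file(payload):
    """POST the payload to /files/ as JSON and return the decoded response."""
    req = urllib.request.Request(
        CLOUD_URL + "/files/",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": "Token " + TOKEN, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


# Usage: create_file(file_payload("http://127.0.0.1:8001/projects/36/",
#                                 url="http://example/file/path.jpg", name="Custom-Filename.mp4"))
```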

{

"url": "http://cloud.openshot.org/files/721/",

"id": 721,

"media": "http://cloud.openshot.org/media/video/files/292/sintel_trailer-720p.mp4",

"project": "http://cloud.openshot.org/projects/292/",

"actions": [

"http://cloud.openshot.org/files/721/thumbnail/"

],

"json": {

"channel_layout": 3,

"has_video": true,

"pixel_ratio": {

"num": 1,

"den": 1

},

"height": 720,

"audio_stream_index": 1,

"vcodec": "h264",

"top_field_first": true,

"video_stream_index": 0,

"file_size": "7610368",

"media_type": "video",

"interlaced_frame": false,

"has_audio": true,

"path": "/home/ubuntu/api/video/files/292/sintel_trailer-720p.mp4",

"fps": {

"num": 24,

"den": 1

},

"duration": 51.9466667175293,

"audio_bit_rate": 126694,

"acodec": "aac",

"type": "FFmpegReader",

"video_timebase": {

"num": 1,

"den": 24

},

"sample_rate": 48000,

"width": 1280,

"audio_timebase": {

"num": 1,

"den": 48000

},

"video_bit_rate": 1166138,

"has_single_image": false,

"display_ratio": {

"num": 16,

"den": 9

},

"metadata": {

"major_brand": "isom",

"artist": "Durian Open Movie Team",

"encoder": "Lavf52.62.0",

"copyright": "(c) copyright Blender Foundation | durian.blender.org",

"creation_time": "1970-01-01 00:00:00",

"minor_version": "512",

"language": "und",

"handler_name": "SoundHandler",

"compatible_brands": "isomiso2avc1mp41",

"title": "Sintel Trailer",

"description": "Trailer for the Sintel open movie project"

},

"video_length": "1253",

"pixel_format": 0,

"channels": 2

},

"date_created": "2018-02-25T22:03:22.323107Z",

"date_updated": "2018-02-25T22:03:22.589635Z"

}

{

"url": "http://cloud.openshot.org/files/719/",

"id": 719,

"media": "http://cloud.openshot.org/media/video/files/292/01_-_Universal_Fanfare_From__Pitch_Perfect_2__Soundtrack.mp3",

"project": "http://cloud.openshot.org/projects/292/",

"actions": [
"http://cloud.openshot.org/files/719/copy/",
"http://cloud.openshot.org/files/719/download/",
"http://cloud.openshot.org/files/719/text-replace/",
"http://cloud.openshot.org/files/719/thumbnail/"
],

"json": {

"interlaced_frame": false,

"height": 600,

"metadata": {

"album": "Pitch Perfect 2 (Original Motion Picture Soundtrack)",

"date": "2015",

"track": "1/18",

"artist": "Elizabeth Banks and John Michael Higgins",

"copyright": "(C) 2015 Universal Music Enterprises, a Division of UMG Recordings, Inc.",

"title": "Universal Fanfare (From \"Pitch Perfect 2\" Soundtrack)",

"comment": "Cover (front)",

"composer": "Jerry Goldsmith",

"genre": "Soundtracks",

"album_artist": "Various artists",

"disc": "1/1"

},

"width": 600,

"video_length": "793",

"duration": 33.044898986816406,

"pixel_format": 12,

"acodec": "mp3",

"video_bit_rate": 269082,

"path": "/home/ubuntu/api/video/files/292/01_-_Universal_Fanfare_From__Pitch_Perfect_2__Soundtrack.mp3",

"media_type": "video",

"audio_stream_index": 0,

"sample_rate": 44100,

"has_single_image": false,

"video_stream_index": 1,

"top_field_first": true,

"display_ratio": {

"den": 1,

"num": 1

},

"video_timebase": {

"den": 24,

"num": 1

},

"channels": 2,

"vcodec": "mjpeg",

"has_video": true,

"has_audio": true,

"fps": {

"den": 1,

"num": 24

},

"type": "FFmpegReader",

"audio_timebase": {

"den": 14112000,

"num": 1

},

"pixel_ratio": {

"den": 1,

"num": 1

},

"file_size": "1111476",

"channel_layout": 3,

"audio_bit_rate": 250729

},

"date_created": "2018-02-25T21:58:11.920072Z",

"date_updated": "2018-02-25T21:58:12.190887Z"

}

/files/<id>/

API endpoint to manage a specific file.

Request Parameters:

| Name | Description |
|---|---|
| media | |
| project | |
| json | |

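import requests
from requests.auth import HTTPBasicAuth

CLOUD_URL = "http://cloud.openshot.org"
end_point = "/files/"                  # POST here to create a new File object
source_path = "/path/to/video.mp4"     # local file to upload
source_name = "video.mp4"              # filename sent to the API

file_data = {
    "media": None,  # the actual bytes are sent in the "media" file field below
    "project": "http://cloud.openshot.org/projects/292/",
    "json": "{}"
}

with open(source_path, "rb") as media:
    r = requests.post(CLOUD_URL + end_point, data=file_data,
                      files={"media": (source_name, media)},
                      auth=HTTPBasicAuth('demo-cloud', 'demo-password'))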

/files/<id>/copy/

API endpoint that copies/duplicates a file, including the local file asset. A duplicated file can be deleted or changed without affecting the original file. This is useful for duplicating SVG title files, and other text-based assets.

Request Parameters:

| Name | Description |
|---|---|
| name | |
| project | |

{

"name": "NewFileName.mp4",

"project": "123"

}

/files/<id>/download/

API endpoint that downloads a file with authentication. If you need secure access to your File media, use this endpoint to download the file securely. However, the media folder is still exposed by Apache and must be disabled manually for fully secure media.

To disable the Apache media path, edit /etc/apache2/sites-available/openshot.conf, remove the Alias /media /home/ubuntu/api/ line, and then restart Apache.

When you GET the file from this endpoint, it returns the correct Content-Type for the file along with the actual file bytes. No JSON is returned. Using this endpoint is slower than accessing the media file directly through Apache, so you are trading speed for security.
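Because this endpoint returns raw bytes rather than JSON, large files are best streamed straight to disk. A standard-library sketch with Token Authentication; CLOUD_URL, TOKEN, and download_file are placeholders, and the opener parameter only exists so the function can be exercised without a server.

```python
import shutil
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user


def download_file(file_id, dest_path, opener=urllib.request.urlopen):
    """Stream /files/<id>/download/ to dest_path with Token auth; return bytes written.

    `opener` defaults to urllib's urlopen and can be swapped out in tests.
    """
    req = urllib.request.Request(
        f"{CLOUD_URL}/files/{file_id}/download/",
        headers={"Authorization": "Token " + TOKEN},
    )
    with opener(req) as resp, open(dest_path, "wb") as out:
        shutil.copyfileobj(resp, out)  # copies in chunks, never the whole file in memory
        return out.tell()
```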

/files/<id>/storage/

API endpoint that calculates the file size of a File object. It can use the local filesystem, connected cloud storage, or the pre-calculated json file size value. For the file itself, all 3 backends return the same size, but each has its own advantages. For example, cloud storage also counts other objects stored in the same cloud path (such as other files uploaded by your application). json does not require the file to exist on the API server for inspection. local is the default method; it inspects the local media file, and thus requires the file to exist on the API server.

Request Parameters:

| Name | Description |
|---|---|
| backend | |

{
    "backend": "local"
}

{

"backend": "local",

"storage": 20696366

}

/files/<id>/text-replace/

API endpoint for replacing text in text-based files, such as replacing words and phrases in an SVG file. This is especially useful for editing text in a template SVG file. See /files/<id>/copy/ endpoint for making a copy of an SVG file before replacing the text.

{

"search": "Find Me",

"replace": "Replacement"

}
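The copy-then-replace workflow described above can be scripted so the template SVG itself is never modified. A standard-library sketch; it assumes both endpoints accept a POST with the JSON bodies shown in this section and that the copy endpoint returns the new File object (with an "id"). The helper names are hypothetical, and post is a parameter so the flow can be checked without a server.

```python
import json
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user


def post_json(path, payload):
    """POST a JSON payload with Token auth and return the decoded response."""
    req = urllib.request.Request(
        CLOUD_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": "Token " + TOKEN, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


def personalize_svg(file_id, project_id, new_name, replacements, post=post_json):
    """Copy an SVG template File, then run each search/replace on the copy only."""
    copy = post(f"/files/{file_id}/copy/", {"name": new_name, "project": str(project_id)})
    new_id = copy["id"]  # assumption: the copy endpoint returns the new File object
    for search, replace in replacements.items():
        post(f"/files/{new_id}/text-replace/", {"search": search, "replace": replace})
    return new_id
```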

/files/<id>/thumbnail/

API endpoint to return a thumbnail image of a specific file frame (returns binary image). Image formats supported: BMP, JPG, JPEG, PNG, PPM, XBM, XPM.

{

"frame_number": 1,

"width": 1920,

"height": 1080,

"image_format": "JPG",

"image_quality": 100

}

Alternatively, you can call this endpoint with a GET request and no arguments. It defaults to JPEG format, frame 60, and 720p size, which makes it a fast way to implement thumbnails: GET /files/ID/thumbnail/ returns the image with a content-type of image/jpeg. For example: <img src="http://SERVER/files/12345/thumbnail/">

/files/<id>/transcribe/

API endpoint to transcribe (and optionally translate to English) audio voice data. Powered by the Whisper AI model (from OpenAI): https://github.com/openai/whisper. The first time you use a specific model, there is an extra delay due to downloading the latest model files required by Whisper.

| Model | Description |
|---|---|
| tiny | Works great for English-only transcriptions (fastest, less accurate) |
| base | Works great for English-only transcriptions |
| small | Works well for translating non-English to English transcriptions |
| medium | Slower than the small model, but more accurate when translating to English |
| large | Most accurate when translating non-English languages to English (slowest) |

{

"model": "base",

"language": "",

"translate": false

}

{

"model": "small",

"language": "",

"translate": true

}

{

"model": "small",

"language": "",

"translate": true,

"args": {

"device": "cuda",

"fp16": true,

"compression_ratio_threshold": 2.4,

"logprob_threshold": -1.0,

"no_speech_threshold": 0.6,

"condition_on_previous_text": true

}

}
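A standard-library sketch that builds the three request shapes above: the basic model/language/translate fields, plus optional Whisper arguments nested under "args". It validates the model name against the table and assumes a POST with a JSON body; CLOUD_URL, TOKEN, and the helper names are placeholders.

```python
import json
import urllib.request

CLOUD_URL = "http://YourInstanceIP"  # assumption: your instance URL
TOKEN = "YOUR-TOKEN"                 # assumption: a token for your user

MODELS = ("tiny", "base", "small", "medium", "large")


def transcribe_payload(model="base", language="", translate=False, **whisper_args):
    """Body for /files/<id>/transcribe/; extra keyword arguments go under "args"."""
    if model not in MODELS:
        raise ValueError(f"model must be one of {MODELS}")
    payload = {"model": model, "language": language, "translate": translate}
    if whisper_args:
        payload["args"] = whisper_args
    return payload


def transcribe(file_id, **options):
    """POST the request and return the decoded JSON response."""
    req = urllib.request.Request(
        f"{CLOUD_URL}/files/{file_id}/transcribe/",
        data=json.dumps(transcribe_payload(**options)).encode("utf-8"),
        headers={"Authorization": "Token " + TOKEN, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


# Usage: transcribe(721, model="small", translate=True, device="cuda", fp16=True)
```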

/clips/

API endpoint that manages clips. A clip is a container for a file object, located on a specific layer and at a specific position (in seconds) on the timeline. Clips can also manipulate their source file by adjusting transparency, location, rotation, shearing, and scale. Most properties on a clip can also be animated by adjusting their values over time and choosing an interpolation type (linear, bezier, or constant). See Animation for more info on keyframes and animating.

See Schema Documentation for a more accurate schema definition for ALL endpoints.

| Common Properties | Description |
|---|---|
| alpha | Alpha transparency (0=invisible to 1=fully visible) |
| scale_x | Width as a percentage (0 to 1) |
| scale_y | Height as a percentage (0 to 1) |
| location_x | Left edge as a percentage (0 to 1) |
| location_y | Top edge as a percentage (0 to 1) |
| rotation | Rotation as degrees (0 to 360) |
| volume | Volume as a percentage (0 to 1) |
| scale | Scale mode (0=Crop, 1=Fit, 2=Stretch, 3=None) |
| gravity | Alignment (0=Top Left, 1=Top, 2=Top Right, 3=Left, 4=Center, 5=Right, 6=Bottom Left, 7=Bottom, 8=Bottom Right) |
| has_audio | Include audio stream (0 or 1) |
| has_video | Include video stream (0 or 1) |
| time | Curve representing frames (fast, slow, forward, backwards controls) |
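Each animatable property in the clip example below is a keyframe curve: a "Points" list whose entries hold a "co" coordinate (X, Y) and an "interpolation" type. A small sketch for building such curves follows. It assumes X is the frame number (the examples start at X: 1) and that the interpolation numbers follow libopenshot’s enum (0 = bezier, 1 = linear, 2 = constant). How a curve is sent back to a clip should be checked against the Schema Documentation; the helper names are hypothetical.

```python
import json

# Assumption: these numbers follow libopenshot's InterpolationType enum
# (BEZIER, LINEAR, CONSTANT); confirm against the Schema Documentation.
BEZIER, LINEAR, CONSTANT = 0, 1, 2


def keyframe(*points, interpolation=LINEAR):
    """Build a {"Points": [...]} curve from (frame, value) pairs; X is the frame number."""
    return {
        "Points": [
            {"co": {"X": frame, "Y": value}, "interpolation": interpolation}
            for frame, value in points
        ]
    }


def volume_fade_in(frames):
    """Volume curve rising from silent (0) to full (1) over the first `frames` frames."""
    return keyframe((1, 0.0), (frames, 1.0))


print(json.dumps(volume_fade_in(24)))
```

A single point (as in most properties of the example) holds a constant value; adding more points animates the property between them.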

{
  "url": "http://cloud.openshot.org/clips/87/",
  "file": "http://cloud.openshot.org/files/91/",
  "id": 87,
  "position": 0.0,
  "start": 0.0,
  "end": 10.0,
  "layer": 1,
  "actions": [
    "http://cloud.openshot.org/clips/87/presets/",
    "http://cloud.openshot.org/clips/87/keyframes/",
    "http://cloud.openshot.org/clips/87/reset/"
  ],
  "project": "http://cloud.openshot.org/projects/65/",
  "json": {
    "anchor": 0,
    "channel_filter": {"Points": [{"co": {"Y": -1, "X": 1}, "interpolation": 2}]},
    "waveform": false,
    "scale_x": {"Points": [{"co": {"Y": 1, "X": 1}, "interpolation": 2}]},
    "has_video": {"Points": [{"co": {"Y": -1, "X": 1}, "interpolation": 2}]},
    "has_audio": {"Points": [{"co": {"Y": -1, "X": 1}, "interpolation": 2}]},
    "location_y": {"Points": [{"co": {"Y": 0, "X": 1}, "interpolation": 2}]},
    "alpha": {"Points": [{"co": {"Y": 1, "X": 1}, "interpolation": 2}]},
    "image": "thumbnail/91.png",
    "shear_x": {"Points": [{"co": {"Y": 0, "X": 1}, "interpolation": 2}]},
    "shear_y": {"Points": [{"co": {"Y": 0, "X": 1}, "interpolation": 2}]},
    "rotation": {"Points": [{"co": {"Y": 0, "X": 1}, "interpolation": 2}]},
    "reader": {
      "has_audio": true,
      "height": 720,
      "sample_rate": 48000,
      "pixel_format": 0,
      "interlaced_frame": false,
      "video_length": "1253",
      "has_single_image": false,
      "media_type": "video",
      "path": "/home/ubuntu/api/video/files/65/sintel_trailer-720p.mp4",
      "channels": 2,
      "top_field_first": true,
      "audio_bit_rate": 126694,
      "width": 1280,
      "display_ratio": {"num": 16, "den": 9},
      "file_size": "7610368",
      "duration": 51.9466667175293,
      "video_timebase": {"num": 1, "den": 24},
      "vcodec": "h264",
      "audio_timebase": {"num": 1, "den": 48000},
      "pixel_ratio": {"num": 1, "den": 1},
      "channel_layout": 3,
      "has_video": true,
      "video_bit_rate": 1166138,
      "video_stream_index": 0,
      "type": "FFmpegReader",
      "fps": {"num": 24, "den": 1},
      "audio_stream_index": 1,
      "acodec": "aac"
    },
    "scale": 1,
    "channel_mapping": {"Points": [{"co": {"Y": -1, "X": 1}, "interpolation": 2}]},
    "gravity": 4,
    "scale_y": {"Points": [{"co": {"Y": 1, "X": 1}, "interpolation": 2}]},
    "volume": {"Points": [{"co": {"Y": 1, "X": 1}, "interpolation": 2}]},
    "title": "sintel_trailer-720p.mp4",
    "wave_color": {
      "blue": {"Points": [{"handle_type": 0, "handle_right": {"Y": 0, "X": 0.5}, "handle_left": {"Y": 1, "X": 0.5}, "co": {"Y": 255, "X": 1}, "interpolation": 0}]},
      "alpha": {"Points": [{"handle_type": 0, "handle_right": {"Y": 0, "X": 0.5}, "handle_left": {"Y": 1, "X": 0.5}, "co": {"Y": 255, "X": 1}, "interpolation": 0}]},
      "green": {"Points": [{"handle_type": 0, "handle_right": {"Y": 0, "X": 0.5}, "handle_left": {"Y": 1, "X": 0.5}, "co": {"Y": 123, "X": 1}, "interpolation": 0}]},
      "red": {"Points": [{"handle_type": 0, "handle_right": {"Y": 0, "X": 0.5}, "handle_left": {"Y": 1, "X": 0.5}, "co": {"Y": 0, "X": 1}, "interpolation": 0}]}
    },
    "display": 0,
    "time": {"Points": [{"co": {"Y": 1, "X": 1}, "interpolation": 2}]},
    "location_x": {"Points": [{"co": {"Y": 0, "X": 1}, "interpolation": 2}]}
  },
  "date_created": "2017-11-07T04:24:25.632357Z",
  "date_updated": "2017-11-07T04:24:25.635518Z"
}
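Every animatable property in the clip json above shares one shape: a Points list whose entries hold a co coordinate and an interpolation code. This small sketch reads and builds such objects. The helper names are hypothetical. Treating X as the frame number and Y as the value is our reading of the sample (each static property has a single point at X = 1), not a documented contract, and the default interpolation of 2 is copied from the sample rather than chosen for any stated meaning.

```python
# Assumption: in each point, co.X is a frame number and co.Y is the property value.

def keyframe_points(clip_json: dict, prop: str) -> list[tuple[float, float]]:
    """Return (frame, value) pairs for one keyframed property."""
    return [(p["co"]["X"], p["co"]["Y"]) for p in clip_json[prop]["Points"]]

def single_point_keyframe(value: float, frame: float = 1, interpolation: int = 2) -> dict:
    """Build a one-point keyframe object shaped like those in the sample response."""
    return {"Points": [{"co": {"Y": value, "X": frame}, "interpolation": interpolation}]}

clip_json = {
    "alpha": single_point_keyframe(1),
    "rotation": single_point_keyframe(0),
}
print(keyframe_points(clip_json, "alpha"))  # [(1, 1)]
```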

/clips/<id>/

API endpoint to manage a specific clip.

Request Parameters:

| Name | Description |
|---|---|
| position | |
| start | |
| end | |
| layer | |
| file | |
| project | |
| json | |

{
  "file": "http://cloud.openshot.org/files/421/",
  "position": 0.0,
  "start": 0.0,
  "end": 10.0,
  "layer": 1,
  "project": "http://cloud.openshot.org/projects/147/",
  "json": "{}"
}
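This sketch prepares a token-authenticated request that moves an existing clip. It uses the standard library's urllib.request, not the requests package from the authentication examples, so it has no third-party dependencies. It assumes the endpoint accepts partial updates via PATCH, which this page does not state. If it does not, send PUT with every field from the request body above. The function name, token, and clip id are placeholders.

```python
import json
import urllib.request

def build_clip_update(base_url: str, clip_id: int, token: str, changes: dict) -> urllib.request.Request:
    """Prepare (but do not send) a PATCH request that updates selected clip fields."""
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/clips/{clip_id}/",
        data=json.dumps(changes).encode("utf-8"),
        headers={
            "Authorization": f"Token {token}",  # Token authentication header
            "Content-Type": "application/json",
        },
        method="PATCH",  # assumption: partial updates are accepted
    )

req = build_clip_update("http://YourInstanceIP", 87, "YOUR-TOKEN", {"position": 5.0, "layer": 2})
print(req.get_method(), req.full_url)  # PATCH http://YourInstanceIP/clips/87/
# To actually send it: urllib.request.urlopen(req)
```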

/clips/<id>/keyframes/

API endpoint to create custom keyframes for any clip property which supports keyframes and animation. See Animation.

{
  "property": "alpha",
  "position_in_seconds": 3.0,
  "curve": "Linear",
  "value": "1.0"
}
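You can generate the keyframe body above once per point. This sketch prepares one token-authenticated request per keyframe. It assumes that POST is the method for this action and that each request adds a single keyframe. The helper name is hypothetical, and the two-point alpha ramp is only an illustration; the presets endpoint offers a ready-made Fade In.

```python
import json
import urllib.request

def keyframe_request(base_url, clip_id, token, prop, seconds, value, curve="Linear"):
    """Prepare a POST to /clips/<id>/keyframes/ for a single keyframe."""
    payload = {
        "property": prop,
        "position_in_seconds": float(seconds),
        "curve": curve,
        "value": str(value),  # the documented example sends the value as a string
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/clips/{clip_id}/keyframes/",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Token {token}", "Content-Type": "application/json"},
        method="POST",
    )

# Illustrative manual fade-in: alpha 0.0 at 0 s, rising to 1.0 at 3 s.
requests_to_send = [
    keyframe_request("http://YourInstanceIP", 87, "YOUR-TOKEN", "alpha", 0, 0.0),
    keyframe_request("http://YourInstanceIP", 87, "YOUR-TOKEN", "alpha", 3, 1.0),
]
for req in requests_to_send:
    print(json.loads(req.data))
    # urllib.request.urlopen(req)  # uncomment to send
```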

/clips/<id>/presets/

API endpoint to apply a variety of common keyframe presets to a clip, including fading in/out, scaling in/out, rotation, etc… See Animation.

{
  "preset": "Fade In",
  "length_in_seconds": 3.0,
  "curve": "Ease (Default)"
}

/clips/<id>/reset/

API endpoint to reset keyframes for common clip properties (such as alpha, scale, location, and rotation). See Animation.

/clips/<id>/captions/

API endpoint to append transcription / caption text to a clip using the Caption effect (added to the Clip’s JSON). It updates an existing Caption effect if one is found, or creates a new Caption effect if not. You can add VTT/SubRip formatted caption text and time codes manually, or import the related File object’s previous transcription data.

For example, if you previously used the /files/<id>/transcribe/ endpoint to generate VTT/SubRip formatted caption text, this endpoint can import that data automatically, so the caption text is rendered on top of this clip. You can import a specific language code, or leave file_transcript_language blank to use the first language found.

{
  "caption_text": "00:00.000 --> 00:07.000\nCaption text to be displayed between 0 and 7 seconds.",
  "append_file_transcript": false,
  "file_transcript_language": ""
}

{
  "caption_text": "",
  "append_file_transcript": true,
  "file_transcript_language": "en"
}
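When you supply caption_text manually, this sketch builds it from (start, end, text) tuples. It uses the MM:SS.mmm time codes shown in the first example above. The function names are hypothetical. Separating multiple cues with a blank line follows the usual VTT/SubRip convention and is an assumption here. The two-digit minutes format only suits clips shorter than one hour.

```python
def format_timecode(seconds: float) -> str:
    """Format seconds as MM:SS.mmm, matching the caption_text example."""
    total_ms = round(seconds * 1000)
    minutes, rest_ms = divmod(total_ms, 60_000)
    secs, ms = divmod(rest_ms, 1000)
    return f"{minutes:02d}:{secs:02d}.{ms:03d}"

def build_caption_text(cues: list[tuple[float, float, str]]) -> str:
    """Join (start, end, text) cues; the blank-line separator is an assumption."""
    blocks = [f"{format_timecode(start)} --> {format_timecode(end)}\n{text}"
              for start, end, text in cues]
    return "\n\n".join(blocks)

payload = {
    "caption_text": build_caption_text(
        [(0, 7, "Caption text to be displayed between 0 and 7 seconds.")]),
    "append_file_transcript": False,
    "file_transcript_language": "",
}
print(payload["caption_text"])
```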

/effects/

API endpoint that manages effects. An effect manipulates the image or audio of a frame, and is placed on a specific layer at a specific position (in seconds) on the timeline. Most effect properties can also be animated by adjusting their values over time and choosing an interpolation type (linear, bezier, or constant). For details on all available effects, see /info/effects/.

See Schema Documentation for a more accurate schema definition for ALL endpoints.

{
  "url": "http://cloud.openshot.org/effects/16/",
  "id": 16,
  "title": "Transition",
  "type": "Mask",
  "position": 0.0,
  "start": 0.0,
  "end": 10.0,
  "layer": 1,
  "project": "http://cloud.openshot.org/projects/78/",
  "json": {
    "name": "Alpha Mask / Wipe Transition",
    "brightness": {"Points": []},
    "contrast": {"Points": []},
    "short_name": "Mask",
    "description": "Uses a grayscale mask image to gradually wipe / transition between 2 images.",
    "reader": {},
    "class_name": "Mask",
    "replace_image": false,
    "has_audio": false,
    "has_video": true,
    "order": 0
  },
  "date_created": "2017-11-07T04:26:48.353239Z",
  "date_updated": "2017-11-07T04:26:48.379193Z"
}

/effects/<id>/

API endpoint to manage a specific effect.

Request Parameters:

| Name | Description |
|---|---|
| position | |
| start | |
| end | |
| layer | |
| title | |
| type | |
| project | |
| json | |

{
  "title": "Negative Effect",
  "type": "Negate",
  "position": 0.0,
  "start": 0.0,
  "end": 10.0,
  "layer": 1,
  "project": "http://cloud.openshot.org/projects/147/",
  "json": "{}"
}
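This sketch prepares a request that creates a new effect, using the fields from the example above. It assumes that a new effect is created by a POST of these fields to /effects/, which this page does not spell out. Because json is sent as a JSON-encoded string ("{}" above), the helper encodes it with json.dumps. The function name and placeholder values are hypothetical.

```python
import json
import urllib.request

def build_effect_create(base_url, token, project_url, effect_type, title, layer=1,
                        position=0.0, start=0.0, end=10.0, effect_json=None):
    """Prepare (but do not send) a POST that creates an effect on a project."""
    body = {
        "title": title,
        "type": effect_type,
        "position": position,
        "start": start,
        "end": end,
        "layer": layer,
        "project": project_url,
        # The "json" field is itself a JSON-encoded string, not a nested object.
        "json": json.dumps(effect_json or {}),
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/effects/",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Token {token}", "Content-Type": "application/json"},
        method="POST",
    )

req = build_effect_create("http://YourInstanceIP", "YOUR-TOKEN",
                          "http://YourInstanceIP/projects/147/", "Negate", "Negative Effect")
print(json.loads(req.data)["json"])  # {}
```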

/exports/

API endpoint that manages video rendering exports. An export is a final rendered output of a video project, which converts all clips, transitions, and effects into a final output file (or files). An Export can be a video, audio, image, image sequence, or waveform. See Export / Render. See Frame Numbers for more information on calculating frame numbers (i.e. start_frame, end_frame).

See Schema Documentation for a more accurate schema definition for ALL endpoints.

{
  "url": "http://cloud.openshot.org/exports/1/",
  "id": 1,
  "output": "http://cloud.openshot.org/media/video/output/1/output-1-1-2a40f424.mp4",
  "export_type": "video",
  "video_format": "mp4",
  "video_codec": "libx264",
  "video_bitrate": 8000000,
  "audio_codec": "ac3",
  "audio_bitrate": 1920000,
  "start_frame": 1,
  "end_frame": 301,
  "actions": [
    "http://cloud.openshot.org/exports/1/retry/"
  ],
  "project": "http://cloud.openshot.org/projects/1/",
  "webhook": "http://myserver.com/video-export/",
  "json": {},
  "progress": 100.0,
  "status": "completed",
  "date_created": "2017-11-07T07:21:33.397002Z",
  "date_updated": "2017-11-07T07:40:26.458371Z"
}

By default, an export uses the parent project settings for width, height, FPS (frames per second), sample_rate, channels, and channel_layout. Any of these attributes can be overridden on an individual Export by using the json attribute, for example:

"json": "{ \"height\": 640, \"width\": 480, \"fps\": {\"num\": 30, \"den\": 1} }"

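Because json is a string field, building it with json.dumps avoids escaping the quotes by hand. This minimal sketch shows the override example above. The other export fields are omitted for brevity, and the project URL is a placeholder.

```python
import json

# Override values taken from the example above.
overrides = {"height": 640, "width": 480, "fps": {"num": 30, "den": 1}}

export_body = {
    "export_type": "video",
    "video_format": "mp4",
    "project": "http://YourInstanceIP/projects/1/",  # placeholder project URL
    "json": json.dumps(overrides),  # the json attribute is itself a JSON string
}
print(export_body["json"])  # {"height": 640, "width": 480, "fps": {"num": 30, "den": 1}}
```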
Exports also support a webhook: an optional, custom URL that is requested once an export has succeeded or failed. The Export data is sent as an application/json POST, and includes "json": { "status": "success", "detail": "Optional error message if something broke" }.

Optionally, you can set "json": { "webhook-form-urlencoded": true } to send the data as application/x-www-form-urlencoded instead (included for legacy support). The response from your webhook request is also saved in the export record: "json": { "webhook": "200: [application/json] Your webhook response data" }.

{
  "url": "http://cloud.openshot.org/exports/42/",
  "id": 42,
  "output": "http://cloud.openshot.org/media/video/output/70/output-70-42-1385f06a.mp4",
  "export_type": "video",
  "video_format": "mp4",
  "video_codec": "libx264",
  "video_bitrate": 8000000,
  "audio_codec": "libfdk_aac",
  "audio_bitrate": 1920000,
  "start_frame": 1,
  "end_frame": 91,
  "actions": [
    "http://cloud.openshot.org/exports/42/download/",
    "http://cloud.openshot.org/exports/42/retry/",
    "http://cloud.openshot.org/exports/42/storage/"
  ],
  "project": "http://cloud.openshot.org/projects/70/",
  "webhook": "http://MY-SERVER.com/video-export/",
  "json": {
    "hostname": "private-IP-instance",
    "worker_stats": {
      "cpu": 36.4,
      "memory": 26.7
    },
    "error": "Optional error message will be here if something goes wrong",
    "webhook": "200: [application/json] Response body from your webhook will be saved here (after the webhook is made)"
  },
  "progress": 100.0,
  "status": "completed",
  "date_created": "2021-09-24T04:06:46.547766Z",
  "date_updated": "2021-09-24T04:07:33.384356Z"
}
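On the receiving side, this sketch shows how a webhook handler on your own server might pull the useful fields out of the POST body shown above. The function name is hypothetical. The JSON branch follows the structure documented here. The form-urlencoded branch assumes that each field arrives as one form value, with the nested json object encoded as a JSON string. That layout is not confirmed, so check it against a real legacy webhook before relying on it.

```python
import json
from urllib.parse import parse_qs

def parse_export_webhook(body: bytes, content_type: str) -> dict:
    """Extract the export id, status and any error message from a webhook POST body."""
    if content_type.startswith("application/json"):
        data = json.loads(body)
    elif content_type.startswith("application/x-www-form-urlencoded"):
        # Assumption: one value per field; nested "json" arrives as a JSON string.
        data = {k: v[0] for k, v in parse_qs(body.decode("utf-8")).items()}
        if isinstance(data.get("json"), str):
            data["json"] = json.loads(data["json"])
    else:
        raise ValueError(f"unexpected content type: {content_type}")
    extra = data.get("json") or {}
    return {
        "id": data.get("id"),
        "status": data.get("status"),      # export status, e.g. "completed"
        "result": extra.get("status"),     # e.g. "success"
        "message": extra.get("detail") or extra.get("error"),
    }

print(parse_export_webhook(b'{"id": 42, "status": "completed", "json": {}}', "application/json"))
```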

Exports can automatically upload your completed output file to Amazon S3. S3 must first be configured (with the config-openshot-cloud command, or manually in api/api/settings.py) and requires credentials. To enable the upload, POST the Export data with the following json attribute:

"json": "{ \"url\": \"my/S3/path/FILE.MP4\", \"bucket\": \"MyS3Bucket\", \"acl\": \"public-read\" }"

{
  "export_type": "video",
  "video_format": "mp4",
  "video_codec": "libx264",
  "video_bitrate": 8000000,
  "audio_codec": "ac3",
  "audio_bitrate": 1920000,
  "start_frame": 1,
  "end_frame": 301,
  "project": "http://cloud.openshot.org/projects/1/",
  "webhook": "",
  "json": "{\"bucket\": \"MyS3Bucket\", \"url\": \"my/S3/path/FILE.MP4\", \"acl\": \"public-read\"}"
}

Exports can also automatically upload your completed output file to Azure Blob Storage. Azure must first be configured (with the config-openshot-cloud command, or manually in api/api/settings.py) and requires credentials. To enable the upload, POST the Export data with the following json attribute:

"json": "{ \"url\": \"my/path/FILE.MP4\", \"container\": \"MyBlobContainer\", \"public\": \"blob\" }"

{
  "export_type": "video",
  "video_format": "mp4",
  "video_codec": "libx264",
  "video_bitrate": 8000000,
  "audio_codec": "ac3",
  "audio_bitrate": 1920000,
  "start_frame": 1,
  "end_frame": 301,
  "project": "http://cloud.openshot.org/projects/1/",
  "webhook": "",
  "json": "{\"container\": \"MyBlobContainer\", \"url\": \"my/path/FILE.MP4\", \"public\": \"blob\"}"
}
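This sketch builds either storage-upload body with json.dumps, so the nested quotes are escaped correctly. The encoding settings are copied from the S3 and Azure examples above. The function name and its destination switch are hypothetical, and sending the body as a POST to /exports/ is assumed.

```python
import json

def storage_export_body(project_url: str, destination: str, path: str, target: str,
                        access: str) -> dict:
    """Build an export body that uploads the output to S3 ("s3") or Azure ("azure")."""
    if destination == "s3":
        storage = {"bucket": target, "url": path, "acl": access}
    elif destination == "azure":
        storage = {"container": target, "url": path, "public": access}
    else:
        raise ValueError("destination must be 's3' or 'azure'")
    return {
        "export_type": "video",
        "video_format": "mp4",
        "video_codec": "libx264",
        "video_bitrate": 8000000,
        "audio_codec": "ac3",
        "audio_bitrate": 1920000,
        "start_frame": 1,
        "end_frame": 301,
        "project": project_url,
        "webhook": "",
        "json": json.dumps(storage),  # encoded as a string; escaping handled for us
    }

body = storage_export_body("http://YourInstanceIP/projects/1/", "s3",
                           "my/S3/path/FILE.MP4", "MyS3Bucket", "public-read")
print(body["json"])
```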

/exports/<id>/

API endpoint to manage a specific export.

Request Parameters:

| Name | Description |
|---|---|
| output | |
| export_type | |
| video_format | |
| video_codec | |
| video_bitrate | |
| audio_codec | |
| audio_bitrate | |
| start_frame | |
| end_frame | |
| webhook | |
| json | |

Export Status:

| Name | Description |
|---|---|
| pending | |
| in-progress | |
| completed | |
| failed | |
| cancel | |

{
  "video_format": "mp4",
  "video_codec": "libx264",
  "video_bitrate": "8.0 Mb/sec",
  "audio_codec": "ac3",
  "audio_bitrate": "192 kb/sec",
  "start_frame": 1,
  "end_frame": -1,
  "project": "http://cloud.openshot.org/projects/147/",
  "json": "{}"
}
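As an alternative (or complement) to webhooks, a client can poll an export until it reaches a final status. In this sketch, the HTTP GET of /exports/<id>/ is passed in as a callable, so the loop can be exercised offline with canned responses. The function name, poll interval, and timeout are hypothetical choices. Only completed and failed are treated as final, because a canceled export ends up failed.

```python
import time
from typing import Callable

TERMINAL = {"completed", "failed"}

def wait_for_export(fetch_export: Callable[[], dict], poll_seconds: float = 5.0,
                    timeout: float = 3600.0,
                    sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll until the export status is completed or failed; raise TimeoutError otherwise."""
    waited = 0.0
    while True:
        export = fetch_export()
        if export["status"] in TERMINAL:
            return export
        if waited >= timeout:
            raise TimeoutError(f"export still {export['status']!r} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds

# Offline demo: canned responses stand in for real GET requests.
responses = iter([{"status": "pending", "progress": 0.0},
                  {"status": "in-progress", "progress": 42.0},
                  {"status": "completed", "progress": 100.0}])
result = wait_for_export(lambda: next(responses), sleep=lambda s: None)
print(result["status"])  # completed
```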

/exports/<id>/download/

API endpoint that downloads the Export output file with authentication. If you need secure access to your Export output, use this endpoint to download the file. Note, however, that the media folder is still exposed by Apache and must be disabled manually for fully secure media.

To disable the Apache media path, edit /etc/apache2/sites-available/openshot.conf and remove the Alias /media /home/ubuntu/api/ line.

When you GET the file from this endpoint, it returns the actual file bytes with the correct Content-Type; no JSON is returned. This endpoint is slower than accessing the media file directly through Apache, so you are trading speed for security.
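This sketch streams the authenticated download to disk in chunks, so large renders are never held entirely in memory. The function name is hypothetical. The opener parameter exists only so the code can run without a live server: by default it is urllib.request.urlopen, and the demo passes a fake that returns in-memory bytes.

```python
import io
import os
import shutil
import tempfile
import urllib.request

def download_export(base_url: str, export_id: int, token: str, dest_path: str,
                    opener=urllib.request.urlopen) -> int:
    """Save an export's output file via /exports/<id>/download/; return bytes written."""
    req = urllib.request.Request(
        f"{base_url.rstrip('/')}/exports/{export_id}/download/",
        headers={"Authorization": f"Token {token}"},
    )
    with opener(req) as resp, open(dest_path, "wb") as out:
        shutil.copyfileobj(resp, out)  # copy in chunks rather than reading it all at once
        return out.tell()

# Offline demo: a fake opener stands in for the HTTP call.
with tempfile.TemporaryDirectory() as tmp:
    size = download_export("http://YourInstanceIP", 42, "YOUR-TOKEN",
                           os.path.join(tmp, "output.mp4"),
                           opener=lambda req: io.BytesIO(b"fake video bytes"))
print(size)  # 16
```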

/exports/<id>/retry/

API endpoint to retry a failed export. Sometimes exports can fail while being processed on a worker. This endpoint simply re-queues the export for processing again.

If an export fails due to a hard crash, stays pending for too long (i.e. 45+ minutes), or is interrupted by cloud scaling termination, a scheduled cron job automatically retries it once. This helps with infrequent issues, such as an auto-scaling instance shutting down during an export render, or an out-of-memory hard crash.

It is best practice to watch for the failed status and handle retry logic yourself. Each time a /retry/ happens, the export.json.retries variable is incremented to help you keep track of prior attempts:

{
  "retries": 1
}
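Client-side retry logic can combine the failed status with the retries counter. This sketch decides whether to retry and picks the retry URL from the export's actions list. The limit of three attempts is an arbitrary example and the helper names are hypothetical. The HTTP method used to call /retry/ is not covered here, so the sketch stops short of sending the request.

```python
def should_retry(export: dict, max_retries: int = 3) -> bool:
    """Retry only failed exports whose json.retries count is below a chosen limit."""
    if export.get("status") != "failed":
        return False
    retries = (export.get("json") or {}).get("retries", 0)
    return retries < max_retries

def retry_url(export: dict) -> str:
    """Return the retry action listed in the export's "actions" array."""
    return next(a for a in export["actions"] if a.rstrip("/").endswith("/retry"))

export = {
    "status": "failed",
    "json": {"retries": 1},
    "actions": ["http://cloud.openshot.org/exports/1/retry/"],
}
if should_retry(export):
    print("retry via", retry_url(export))
```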

/exports/<id>/cancel/

API endpoint to cancel a pending or in-progress export. If the export has already completed or failed, this endpoint throws an APIException. Once an export is canceled, its status is set to failed and a failed webhook is sent.

/info/

API endpoint that lists information in JSON format, such as installed fonts and lists of effects and effect properties.

See Schema Documentation for a more accurate schema definition for ALL endpoints.

/info/fonts/

API endpoint to GET all available/installed font families and styles.

If you POST to this endpoint, it will clear the font cache and reload font data. You can also POST a font_file, which will add a new font to the system, and update the font cache.

{
  "font_file": null
}

/info/fonts/thumbnail/

API endpoint to get a large JPEG thumbnail of all available fonts (useful for debugging font issues).

/info/effects/

API endpoint to list all effects and effect attributes/settings.